Over the years, there have been many attempts to contrast parallelism and concurrency, as they relate to computation. Two fairly recent examples (from a quick google search) are:

- Guy Steele Jr, How to Think About Parallel Programming: Not! (video), from 0:29:45 to 0:30:47

- David Liebke, "From Concurrency to Parallelism: an illustrated guide to multi-core parallelism in Clojure"(pdf), the first Clojure Conj., slide 2

Is there justification for this stance? Oxford American Dictionary says parallel (computing) means "involving the simultaneous performance of operations", and concurrent means "existing, happening, or done at the same time". With such similar definitions, it's no wonder that these terms get confused, but while I can understand attempting to clarify their differences, they are not mutually exclusive. In fact, if operations are parallel (simultaneous), they are also necessarily concurrent (happening at the same time).

It's the other cases that apparently confuse, where operations are concurrent but not parallel. Simply put, if many activities overlap in time, at some time scale, they are concurrent, but if they cannot act at precisely the same time because (say) some or all of them are competing for resources, then they can't all work simultaneously (as they take turns accessing the resource), and are thereby not (technically) parallel. The most clear and common case of this is when there is but one processor or person (considered a resource, if you like) available to carry out a set of concurrent activities. That processor or person must then advance one activity at a time, or perhaps time-slice or multitask to move them all forward in stages. Some people consider Petri Nets to embody this concept of concurrency, because one person is often employed to play the token game, even if multiple transitions could technically fire simultaneously. Also, the transitions in Petri Nets fire atomically, which can be interpreted to mean that each one takes no time, thereby precluding multiple from happening at any one at a time (to some level of precision).

It's the other cases that apparently confuse, where operations are concurrent but not parallel. Simply put, if many activities overlap in time, at some time scale, they are concurrent, but if they cannot act at precisely the same time because (say) some or all of them are competing for resources, then they can't all work simultaneously (as they take turns accessing the resource), and are thereby not (technically) parallel. The most clear and common case of this is when there is but one processor or person (considered a resource, if you like) available to carry out a set of concurrent activities. That processor or person must then advance one activity at a time, or perhaps time-slice or multitask to move them all forward in stages. Some people consider Petri Nets to embody this concept of concurrency, because one person is often employed to play the token game, even if multiple transitions could technically fire simultaneously. Also, the transitions in Petri Nets fire atomically, which can be interpreted to mean that each one takes no time, thereby precluding multiple from happening at any one at a time (to some level of precision). In addition to the computing sense, the term "parallel" also has a geometrical definition, "side by side and having the same distance continuously between them". If one draws a space-time diagram of a set of activities, and they form parallel (adjacent) line segments in the geometrical sense, then those activities are parallel in the computing sense. It implies that at some points in time (at least), the events are all active at the same time, though at different places (points in space). This highlights an advantage of parallelism over just concurrency: To keep the individual events each going, to get as much done in as short a time as possible. And it illustrates how to accomplish that: By having the different activities at different places, and interacting as little (i.e. being as independent) as possible.

In addition to the computing sense, the term "parallel" also has a geometrical definition, "side by side and having the same distance continuously between them". If one draws a space-time diagram of a set of activities, and they form parallel (adjacent) line segments in the geometrical sense, then those activities are parallel in the computing sense. It implies that at some points in time (at least), the events are all active at the same time, though at different places (points in space). This highlights an advantage of parallelism over just concurrency: To keep the individual events each going, to get as much done in as short a time as possible. And it illustrates how to accomplish that: By having the different activities at different places, and interacting as little (i.e. being as independent) as possible.But now to get serious. Since concurrency is a precondition to parallelism, parallel programming is necessarily concurrent programming. And in common usage, although atomic actions (e.g. transactions) can be considered abstractly to take no time, in reality they do take time and can thereby be parallel. Plus, regardless of how one implements parallelism, virtually all parallel activities share some resources in a mutually-exclusive fashion, so using that as a basis for differentiating concurrency and parallelism is not as definitive as it might at first seem. (Parallel activities which don't share resources are rare enough that they merit their own terminology, like "embarrassingly parallel".) In fact, it's worse than that: Occurrences at different places (e.g. on different processors) are (relativistically speaking) operating relative to different clocks, so simultaneity is not clearly and objectively defined.

But even assuming that one comes up with objective definitions of parallelism and simultaneity, etc., to delineate some concurrent programs as parallel ones, what features should a parallel programming methodology have over a concurrent programming methodology? And moreover, what would the advantages and disadvantages of building parallel programs be, as opposed to concurrent ones? I'll address that in my next blog entry.

2 comments:

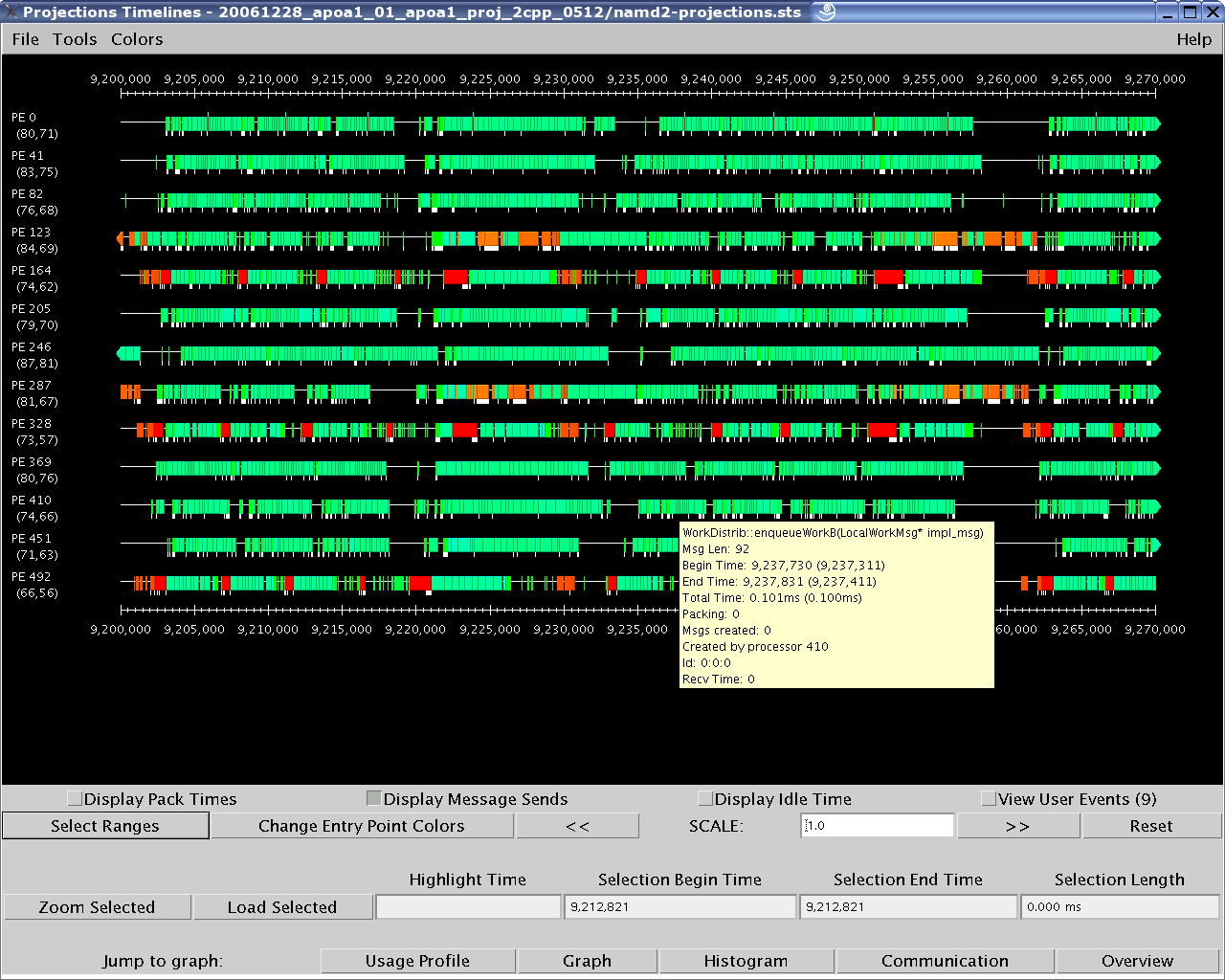

It seems to me that an indicator showing degree of parallelism while executing a concurrent program could be quite useful to the designer. Am I wrong, Dave?

As long as the programmer is responsible for embedding/scheduling the application on the platform, and especially if there is one intended target platform (or only a few), then yes, it's undoubtedly useful. It's the same mindset as programming in assembly language to make best use of the arithmetic units or to prevent pipeline bubbles using predictive branching on a particular chip. There will always be a market for such "bare metal" computing. But when the number of potential platforms goes up and/or the target platform goes out of the programmer's control (as is unavoidable in "the cloud" or if the application is to last any length of time), it is an exercise in futility. Maximizing total concurrency becomes the goal, to be potentially automatically squelched for platforms allowing less (see "variable granularity").

Post a Comment