I've been wanting to explain here why I get excited about ScalPL (Scalable Planning Language), to compare it with other approaches, etc. But to do that, I first need to assume that the reader knows what ScalPL is, even those who haven't read

the book. Fortunately, ScalPL can be separated into its foundational (fairly simple) computational model, called F-Nets (short for function networks), and then the other stuff built on top of that (object-oriented model, arrays, data concurrency, etc.), and I really only need to explain F-Nets to convey many of ScalPL's merits. I'd like to just convey the raw "what" behind F-Nets in one post (here), leaving the "why"s to become clearer in later posts. Although I presented most of the facets of F-Nets

a month ago from a different angle, I'll subsequently use this post as the definitive "quick and complete description" of F-Nets for future reference. So without further ado, in a dozen paragraphs...

An F-Net consists of two main kinds of entities:

Resources and

tactics. A resource, represented graphically by a rectangle (sometimes with other decorations), is a container -- it is capable of holding something static, which is (unsurprisingly) called its

content, or more technically, its

content state. For computers, you can think of a resource roughly like a variable, and its content as its current value. So, each has an

initial content state (either declared or default), and a

content domain (like a data type) which describes the kinds of content it can hold.

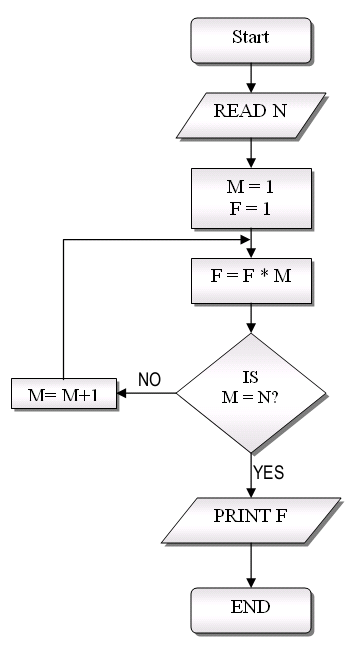

A tactic is a description of what to do to some resources, so it can be considered as a program (subprogram, function, method, etc.). And like a program, which can be run (or executed) to become a process (an active entity "doing its thing"), a tactic can be

activated (executed) to become an

acton (also an active entity "doing its thing").

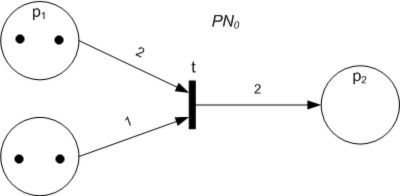

A construct called a

context, represented graphically by a circle, signifies an association between a tactic and a set of resources, by labeling the circle with the name of the tactic, and drawing lines from the circle to the rectangles representing those resources. Multiple contexts in the F-Net can be labeled with the same tactic name. The connecting lines, called

role bindings, are uniquely labeled (explicitly or implicitly, by labeling the resource instead), and these labels/names are used by any acton in that context (i.e. resulting from activating the associated tactic) to designate the resources. The behavior of an acton -- i.e. the alterations it makes to the content of its resources via these role bindings -- is completely determined by the tactic from which the acton springs (i.e. the tactic's description of what to do) and the original contents of those resources when the tactic activates into an acton. In other words, a tactic can be regarded as a function which translates/maps the contents of its resources (as identified by those role binding names) when it activates, to the final contents of those same resources when the activated tactic (i.e. acton) finishes. And since a tactic can be regarded as a function, then a context can be regarded as specifying an application of that function relative to a specific set of resources. And an acton can be regarded as an evaluation of that application of that function.

Technically, it's possible to get by without specifying much more, just letting the tactics activate atomically (e.g. one at a time) randomly or continuously. In fact, what's been described here so far is already very similar to a computational model developed years ago by

K. M. Chandy and J. Misra called UNITY (or similarly Dijkstra's Guarded Commands) where the guards would be built into the tactics. However, restricting/specifying the circumstances under which each tactic can activate is generally really useful for both understanding and efficiently implementing the F-Net, so there's another piece of the puzzle: In addition to content state, each resource also has another changeable attribute, called its

control state, represented as a color, initially green for each resource. And, each of the role bindings (lines) connecting a context to a resource also has an associated set of (static) colors, represented as colored dots (called

access dots) shown between the end of role binding and the resource rectangle. Now the

activation rule is: A tactic can activate in a context if and only if all of the context's resources have a control state matching one of the access dots on the role binding connecting that resource with the context. And, when a tactic does activate, in addition to accessing (e.g. observing, updating, or replacing) the content of its resources, the acton also assigns a new control state (color) to each resource, thereby (perhaps) allowing other tactics (e.g. associated with other contexts) to activate.

That covers when a tactic

can activate into a tactic, but

must it ever do so? The

liveness rule answers that question: If a tactic

can activate, then it

must, within a finite amount of time --

or, another tactic using at least one of the same resources (and meeting the normal activation criteria) must. In other words, if something can happen, it will/must, as long as something else doesn't interfere.

That is the essence of F-Nets, but there are a few other notations and minor rules which make them more portable, easily understood, and efficient.

- First, we put arrowheads (called permissions) on all role bindings to designate whether an acton in that context might observe/read the contents of that resource (arrowhead on the circle/context end) and/or will always replace/rewrite the contents of that resource (arrowhead on the resource end). A role binding with no arrowheads means that the acton won't touch the content state (though it will still assign it a new control state), and arrowheads on both ends signify the other options -- i.e. that the acton might only change parts of the control state, or might only sometimes replace the entire control state. The four combinations of arrowheads are called observe, replace, nodata, and update permission, respectively (i.e. context end, resource end, neither end, both ends).

- Then, just as arrowheads help to represent what the actons might do to the resources' content states, we use colored dots inside of the resource rectangles to represent what the actons might do to their control states. That is, near where each role binding connects to the rectangle (with access dots), we put one or more colored dots (called, unsurprisingly, new control state dots) inside the rectangle to represent the new control states that the corresponding acton might assign to that resource. It is also common to add a legend inside the left end of the resource rectangle showing each color that the resource might ever assume (i.e. its control domain) and what that color symbolizes.

So now, just by looking at the F-Net, you can tell, to a large extent, how each acton might affect, or be affected by, the content and control states of the resources to which it is bound (attached) by role bindings. The control states make an F-Net act very much like a

Petri Net, in that regard.

But there is one more loose end. If tactics can be considered as programs or functions (specifically what theorists call partial recursive functions), complexity theory tells us that we may not be able to tell, with any amount of analysis, whether an acton (once it has been activated from a tactic) will ever finish accessing the content of any particular resource, or assign it a new control state. (This is true even if the acton has already been cranking away for an arbitrary amount of time.) This messes up the idea that we can activate just one tactic at a time: If one gets stuck, we'd never get around to activating any other tactics, but we might also never be able to know if we can just give up on it, because it might eventually do more if we just give it more time.

The essence of getting around this issue is by simply considering a resource which an acton never finishes with as having a special control state (called

bottom or ⊥) represented by no color at all (often shown as black). Because its can be so hard (or impossible) to figure whether any particular acton will eventually finish accessing any particular one of its resources, it is also generally assumed that any resource could end up with this bottom control state, so it's as though there is an implicit bottom (black) dot added to every set of new control state dots. However, technically speaking, whether an acton will or will not finish up with any particular resource is

still completely determined by its tactic and the initial content of its resources, so even with this so-called augmented control domain, we can still at least abstractly consider each tactic as a (total) functional mapping. And we can freely allow one tactic to activate even before others have finished, as long as we don't let a subsequent tactic activation use a resource that a previous one (i.e. acton) hasn't finished with yet.

And that brings us to one final rule, called the

predictability rule, which greatly increases the efficiency and portability of F-Nets in common cases. It says simply: If all of the new control state dots for a role binding have the same color, and that role binding has either observe or nodata permission (i.e. there is no arrowhead on the resource end of the role binding), then we call the role binding

predictable, which we take to mean that there is

not an implied new control state dot of bottom. That is, predictable role bindings always finish up. And because they always finish up, and they don't alter (update or replace) their content state, and they have only one choice for new control state, we know the final content and control state of the associated resource the moment the tactic is activated, even

before it has finished up.

OK, that's F-Nets in a nutshell. There are other things I could get into, like just how to express tactics in traditional programming language, or how to represent F-Nets textually. There are also other constructs and abstractions: For example, role bindings really represent (surprise!) bindings for things called roles, and there are ways to a make role bindings branch, etc. But relatively speaking, those extras are all just window dressing. F-Nets serve as only the most basic foundation of ScalPL, which altogether has much more functionality, power, and expressiveness than F-Nets, but F-Nets alone are plenty enough to explain many of the advantages of ScalPL over many other approaches. And now onto the beauty of this approach...

As last year, GPUs were a big topic of discussion, but this time, much of the novelty, magic, and infatuation seemed to have worn off, leaving more practical "how to" questions and concerns. The other big talk of the conference (for many of us) was "exascale", the challenge being mounted by the government (DOE/NNSA) to build an exascale computer by 2020 or so.

As last year, GPUs were a big topic of discussion, but this time, much of the novelty, magic, and infatuation seemed to have worn off, leaving more practical "how to" questions and concerns. The other big talk of the conference (for many of us) was "exascale", the challenge being mounted by the government (DOE/NNSA) to build an exascale computer by 2020 or so.