If it's between many words and a few visual components, you and your audience will probably prefer the latter. And yet -- even in this age of tablets, multimedia, gesture and voice recognition, and high-bandwidth connections -- such pictures are a rare medium for communicating our intentions to a computer, regardless of field. Visual programming languages (true ones, not "visual basic", for example) have generally not fared well.

It's true that language processor (compiler) folks have developed most of their techniques and formalisms in the textual realm, but that's hardly an excuse or reason. I'm talking here about the computer-human interaction: If it's formal enough, establishing another internal representation as text (or other bit sequences) is not difficult. And graph grammars have been around for decades.

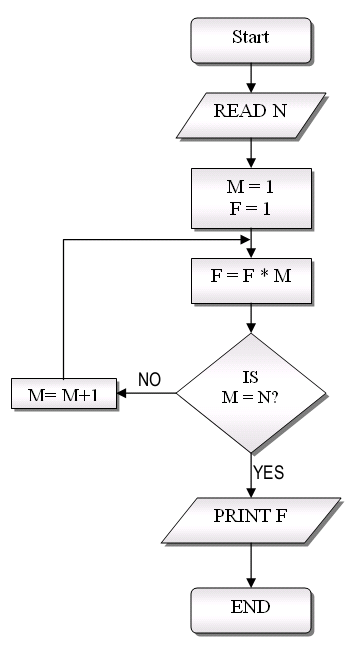

So when does it make sense for a human to use visual components related in 2 or 3 dimensions to convey intent to a computer or other humans? When I took my first programming class in 1972, we were admonished to use flowcharts before writing our programs, to envision the control flow. Back then, once you encoded that into a program, the control flow became severely obscured -- represented by the association between a symbol (usually a line number) in the "goto" statement and that same symbol used elsewhere as a label.

So, we were also told to (somehow) keep our flowchart with the finished program, for later reference: Virtually impossible. Since then, we have found ways to convey about as much information about the control flow in the program itself, using indentation -- so-called structured programming -- and flowcharts are now très passé. A success of software engineering!

Even so, when detailing data flow (how data moves) within a computer program, we almost always use the same "spaghetti code" symbol-matching tricks, but now based on a variable name (instead of statement labels) to establish a relationship between previous definition and next use. In imperative languages, data and control flow are managed separately (with variables for one, control constructs for the other), and programming is the art of getting them to work in tandem to produce a desired result.

And when it comes to parallel processing, we traditionally add yet another set of paths for both control and data flow between processes, using yet another layer of associative matching in either message passing (tag/type and process ID) or shared memory (address or name of shared variable or lock). And the results of those communication matches are entirely dependent upon the confluence in the control and data flows of the individual processes/threads, to the point that the communication matches may (commonly) be non-deterministic.

Dataflow languages attempted to simplify this mess by unifying control and data flows, allowing computation (i.e. control) anywhere that the necessary data appeared (became defined) through fixed channels/pipes. While this suggests the potential use of visual representations (graphs) to encompass both control and data (i.e. arrows representing fixed data pipes between nodes representing potential filters/transforms/computations), it's generally infeasible because of the sheer number of such dependences: Every computation result is conceivably another opportunity for data/control transfer. So, even in this case, associative matching on variable names was used, to convey (now) both data and control dependences. Hardware to facilitate it dynamically became something of a joke, due in part to the bottleneck caused by this associative matching.

Alright then, how about increasing the granularity of the dependences? Increase the size of the data objects flowing (technically "data" not "datums"), thereby decreasing the number and complexity of the dependences, perhaps even to the point that visual representations become feasible again, and shrinking the associative bottleneck. That does indeed solve some of the problem. But it still suggests that control flow is the result of data flow, and now there's more data to flow to lead to such control, resulting in significant overhead for control transfer, moving data around (often needlessly) just to get the right part of the program to "light up" with activity/control. Can't we manipulate the control and the data separately, but let them both operate along the same paths when convenient?

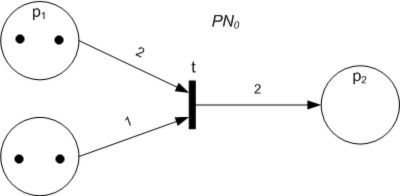

That was the jumping off point of my work from my grad school advisor's (R. Babb) work on Large Grain Data Flow (LGDF). Its basis looks something like a cross between a Petri Net

and a bunch of finite state automata -- each lending themselves to visual representation -- or, if you like, a network of Turing Machines passing tapes around. Through the years, the concepts have evolved, as has the name -- from LGDF2, to F-Nets, to Software Cabling, and now to ScalPL (Scalable Planning Language). All of them have been based on a similar simple circles-lines-boxes visual representation. In my new book, I suggest that the principles and uses extend well beyond the computer programming field, to almost any kind of concurrent planning, but that in the parallel/concurrent programming world, it is the logical extension of structured programming.

and a bunch of finite state automata -- each lending themselves to visual representation -- or, if you like, a network of Turing Machines passing tapes around. Through the years, the concepts have evolved, as has the name -- from LGDF2, to F-Nets, to Software Cabling, and now to ScalPL (Scalable Planning Language). All of them have been based on a similar simple circles-lines-boxes visual representation. In my new book, I suggest that the principles and uses extend well beyond the computer programming field, to almost any kind of concurrent planning, but that in the parallel/concurrent programming world, it is the logical extension of structured programming.And, yes, I believe ScalPL is a representation which is simple and intuitive enough to use when you step up to that whiteboard and wish to convey the relationships between several activities working together, especially if you've got a handful of different-colored markers. But for now, even just using it for parallel and concurrent programming will be a big step forward. What is the link between diagrams and concurrency? For another time...